Tech Recruiter Tries Coding pt. 2 — Google Apps (Type) Script

UPDATE: Part 3 is out!

UPDATE 2: Check the sequel! Recruiter Codes Chrome Extension — A LinkedIn Fairy(-tale)!

Hello again from the world of amateur-ish coding! 😄

In the first part of this now-serial blog, I talked mostly about how to automate recruitment statistics in Google Sheets. But statistics, even when classifying lots of data with lots of rules, are rather trivial in coding terms.

To get into something slightly more advanced, I’ll venture a bit out of the Recruitment domain, and get into what’s usually called Lead Generation.

Retro-engineering the over-engineered world of Lead Generation

For a company that, like FusionWorks, offers awesome software development services, a “lead” is actually quite related to Recruitment:

- A job post for a software development role, since it’s a need it can fulfill.

- Contact details for relevant people in the company that posted it.

- Info about the job, the company, and its people, to classify the lead.

Now, most companies tackle this part of the business doing the following:

- Buying leads data, from companies that collect it in bulk, somehow…

- Importing them into some expensive CRM SaaS solution aimed at sales.

- In such CRM, have one page for each lead where you can change its status, comment on it, and have links for emails, websites, etc.

If it seems overkill, it’s because it is! The truth is CRMs are just fancy interfaces to do basic operations on a database. Actually the simplest of operations, in terms of the underlying logic: basic CRUD (Create, Read, Update, Delete.)

And about bulk leads data, let’s just say it’s some doubtfully accurate (and doubtfully legal, in many cases) stuff to populate the database with.

The best of databases without databases (and why this matters)

At its core, a (relational) database is nothing more than a collection of tables, with some codified relations between them.

It might seem reductive, but if you add some validation rules and scripts to sheets on Google Sheets or Excel, where each sheet can be the exact equivalent of a database table, what you get is a relational database, but one that even people without database experience can work on, and with a UI that can be as fancy and usable as that of CRMs, if not more…

A single, simpler, much cheaper solution can kill two techs/products! ✌️

Reasonable people might disagree, pointing out that I oversimplified databases, and didn’t account for the fact that databases will always offer more possibilities, and be faster, compared to spreadsheets acting as databases.

These would be valid counterpoints if not for a few details:

- For Lead Generation, the extra PROs of databases are largely unneeded.

- Perhaps worse than that, direct database usage (without a CRM as a frontend) would raise the learning curve a lot for Lead Generators.

- Using a CRM, you lose a lot of the flexibility and power proper of both databases and spreadsheets, AND you pay a premium for it!

While following courses on databases, it dawned on me that the only characteristic of databases one could really want for Lead Generation is not even exclusive to databases, nor always applied to them: Database Normalization, in particular the first three “Normal Forms”.

Don’t bother looking these up unless you’re into this stuff: most explanations are crazily formal (pun intended). For us this translates mostly into avoiding duplicate contacts or leads: it could lead to bad mistakes in Lead Generation!

To achieve this and more, we could plan things in the following way:

- Multiple sheets (like the tables of a database) separating jobs, companies, and contacts, one row per entity.

- Each entity associated with a unique identifier, so we can delete duplicates without relying on names and titles. [Important not only for contacts: so many different jobs in different places can be titled “JavaScript Developer”!]

- Some scripting to keep all of this together, and especially populate the database with updated info, without someone having to manually write or copy-paste tons of data, which is alienating as hell.
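To make the unique-identifier idea concrete, here is a minimal sketch of removing duplicates by ID instead of by title. The `JobRow` shape, its field names, and the sample IDs are assumptions made up for illustration, not the actual layout of our sheets.

```typescript
// Hypothetical row shape: the field names are assumptions for illustration.
interface JobRow {
  id: string; // unique identifier of the job post
  title: string;
  company: string;
}

// Keeps the first row seen for each id and drops later duplicates.
function removeDuplicates(rows: JobRow[]): JobRow[] {
  // Object.create(null) gives a plain dictionary with no inherited keys.
  const seen: Record<string, boolean> = Object.create(null);
  return rows.filter((row) => {
    if (seen[row.id]) return false;
    seen[row.id] = true;
    return true;
  });
}

const sampleJobs: JobRow[] = [
  { id: "job-001", title: "JavaScript Developer", company: "Acme" },
  { id: "job-002", title: "JavaScript Developer", company: "Globex" },
  { id: "job-001", title: "JavaScript Developer", company: "Acme" },
];

// Two rows remain: the same title at two different companies is kept,
// while the repeated "job-001" row is dropped.
const uniqueJobs = removeDuplicates(sampleJobs);
```

Matching on the title alone would have merged all three rows into one, which is exactly the mistake the identifier is there to prevent.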

About the last topic, automatic lead data collection, I won’t reveal how we did it because it falls within the textbook definition of business secret… Suffice it to say it requires specific research and coding: if you’d like to try it out, you can contact either me or FusionWorks, and we’ll be happy to help!

But about the rest, I will talk about some examples, and about how it’s all easily developed from the comfort of VS Code, using… TypeScript!

⏭ Skip/skim the next section, if you’re not into coding! ⏭

Going TypeScript without even knowing much of JavaScript?!? 🤯

Having started to code this stuff using the regular Google Apps Script web IDE, it took me some time and courage to switch to VS Code, and even Git: it’s the stuff of real developers. Very far out of my comfort zone!!

But many creators on YouTube that talk about Google Apps Script (usually abbreviated GAS) were endorsing this method, and as an avid consumer of these videos, I decided to give it a try, and… Oh boy, was I missing out!

The trick is using a tool Google made, called CLASP (for Command Line Apps Script Project), which not only allows for pushing and pulling to/from GAS seamlessly from VS Code, (or whatever other IDE you like to use, I guess) but also automatically transpiles TypeScript code into the best JavaScript GAS can use. This is HUGE, even for a noob like me. Why?

I had just started using TypeScript to build a custom Google Chrome extension (topic for a later post in this series I guess, even if chronologically it came before this), and what I noticed is that, by simply changing JavaScript files to TypeScript on VS Code, the main difference is you are forced to be more precise, which avoids running into stupid errors!

Therefore, to my surprise, it is actually easier to code in TypeScript than JavaScript, even more so for a beginner like me, because:

- There is not much to “unlearn” from JS, if you just started anyway.

- You’re alerted when values can be of a type that would cause errors.

- You’re forced to do something about it, basically debugging in advance.
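Here is a tiny sketch of that “debugging in advance”, assuming the `strict` compiler option (or at least `strictNullChecks`) is turned on. The function names are made up for the example.

```typescript
// Looks up a skill's count; returns undefined when the skill was never seen.
function getCount(counts: Record<string, number>, skill: string): number | undefined {
  return Object.prototype.hasOwnProperty.call(counts, skill) ? counts[skill] : undefined;
}

function describeSkill(counts: Record<string, number>, skill: string): string {
  const count = getCount(counts, skill);
  // Without this check, TypeScript refuses to compile `count.toFixed(0)`,
  // because `count` might be undefined. Plain JavaScript would accept the
  // code and only fail later, while the script is running.
  if (count === undefined) {
    return skill + ": not mentioned";
  }
  return skill + ": " + count.toFixed(0) + " mention(s)";
}
```

The compiler points at the problem while you are still typing, instead of letting the error show up halfway through a sheet full of data.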

Therefore, from now on, all the code I will share will be GAS, but written in TypeScript, even if most of the time there will be little to no difference.

⏭ Skip/skim the next TWO sections, if you’re not into coding! ⏭

Of batch-extracting words from webpages, and ancient coding…

Say you have a lot of leads you “magically” get every day (ask in private 🥸) into your Google Sheets, but you want to know at a glance what’s there, without even opening the link. And say these links are to job posts, so this at-a-glance info you want, is the skills the jobs require.

Since there’s no standard format for job posts, you need to parse the webpage word by word, and extract the words looking like skills—possibly counting how many times each is mentioned, to see which is more or less important.

Now, since I learned the basics of programming in ancient times, and then completely stopped until recently, I always tend to think the tasks I give to my code could be too big, and will make the application/script too slow… 🙄

I know GAS even has a limit for how long a script can run, so if I hit it, the results might not arrive, or data might get corrupted! So performance was my top concern: how would I give such a huge task to this thing, looking at the text of so many pages, without risking slow-downs..? 🤔

It may be more obvious to you than to me: in 2022, this way of thinking of mine is completely unjustified, and outdated!! 😅 It’s totally possible to split even a long webpage into all of its words, count the occurrences of specific words, and put the results into a Google Sheet note that magically appears next to the link, in less than a second per page!

With the knowledge I had when I wrote the first article, I would have probably tried to tackle this using a double array of keywords and the number of occurrences, with some crazy nested iteration to go through it… 🤔

The fact JavaScript is object-oriented, and I could have used objects where key-value pairs constitute the skill words and their frequency on a page, would have seemed to my past self of a couple of weeks ago like a sure way of overloading the script and slowing everything down! 😱

Needless to say, it turned out even this concern of mine was archaic BS, and working with objects in JavaScript is not slower than working with arrays in any meaningful way, so using objects, code can become much more readable and less convoluted, without perceivable performance drops!
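To show the difference in readability, here is a rough side-by-side sketch of the two approaches. Both functions are illustrations written for this comparison, not the code I actually used.

```typescript
// "Double array" approach: a list of [skill, count] pairs.
// Every word needs an inner loop to find its pair.
function countWithPairs(words: string[]): [string, number][] {
  const pairs: [string, number][] = [];
  words.forEach((word) => {
    for (const pair of pairs) {
      if (pair[0] === word) {
        pair[1] += 1;
        return; // found it, move on to the next word
      }
    }
    pairs.push([word, 1]);
  });
  return pairs;
}

// Object approach: the word itself is the key, so no inner loop is needed.
function countWithObject(words: string[]): Record<string, number> {
  // Object.create(null) avoids clashes with built-in keys such as "constructor".
  const freq: Record<string, number> = Object.create(null);
  words.forEach((word) => {
    freq[word] = (freq[word] || 0) + 1;
  });
  return freq;
}

const sampleWords = ["React", "Node", "React"];
const asPairs = countWithPairs(sampleWords); // [["React", 2], ["Node", 1]]
const asObject = countWithObject(sampleWords); // { React: 2, Node: 1 }
```

Both give the same counts, but the object version says what it does in a single line.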

Batch-extracting skills from job posts: finally the code!

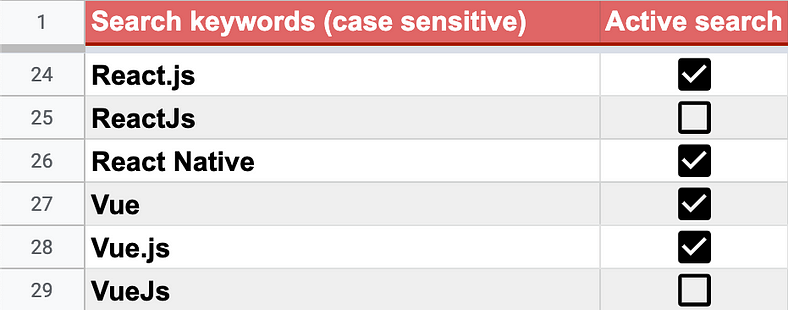

To begin with, I wanted a way to easily change the dictionary of terms I was going to search for with this custom function, without having to change the code, so that Lead Generators could do so without any coding. 🫶

Since we are on Google Sheets, this is as easy as creating a sheet with one word per row in one column, and maybe my beloved checkboxes in the next column, so that we can take stuff in and out of the dictionary without having to delete and rewrite, just by checking or unchecking the boxes.

Here’s the code that reads that sheet, and creates an array with each word:

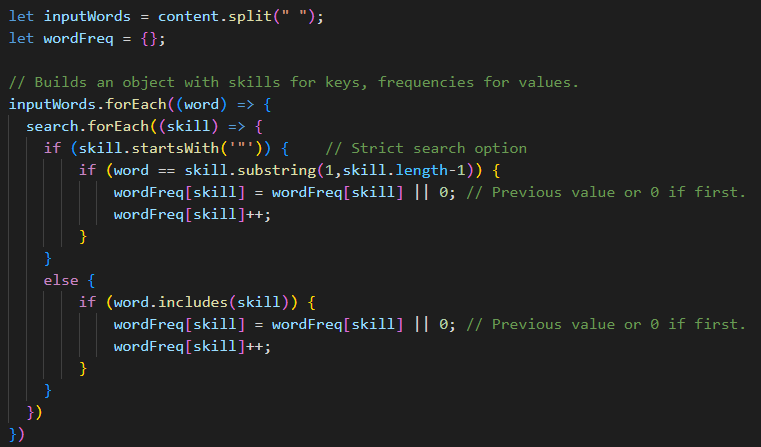
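For those who prefer text to screenshots, the same idea can be sketched roughly like this. It assumes a two-column dictionary sheet (term, checkbox), and the sheet name in the comment is hypothetical. The filtering is kept as a plain function, so it can be tried outside Google Sheets too.

```typescript
// One row of the dictionary sheet as returned by getValues(): [term, isEnabled].
// This two-column layout is an assumption.
type DictionaryRow = [string, boolean];

// Keeps only the non-empty terms whose checkbox is ticked.
function buildSearchTerms(rows: DictionaryRow[]): string[] {
  return rows
    .filter((row) => row[1] === true && String(row[0]).trim() !== "")
    .map((row) => String(row[0]).trim());
}

// In Apps Script the rows would come from the sheet, for example:
//   const rows = SpreadsheetApp.getActive()
//     .getSheetByName("Dictionary")!   // hypothetical sheet name
//     .getDataRange()
//     .getValues() as DictionaryRow[];

const sampleRows: DictionaryRow[] = [
  ["TypeScript", true],
  ['"Java"', true],
  ["PHP", false], // unchecked, so it is left out
  ["", true], // empty cell, so it is left out
];
const searchTerms = buildSearchTerms(sampleRows); // ["TypeScript", "\"Java\""]
```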

Then comes the nice part:

First of all it takes the function’s argument, content (the actual text of the webpage) and splits it into an array of words (inputWords) for easy parsing.

Then within the forEach loop, it builds the previously empty wordFreq{} object, adding keys named like the words/skills found, and associating to them a value that builds up to the number of times the word is found.

Note that this is done with a nested forEach loop on the previously built array of keywords to be found (called “search”), and works in two ways: if the search term is listed in double quotes, it will be found only when it appears as a stand-alone word. If not, it will also be found when attached to other words/characters.

Useful because otherwise “Java” would always be included in every “JavaScript” job post… 😄 But not if we search for it with the double quotes!

I know, this is not perfect for every case: looking for stand-alone words will fail if even just a comma is attached. I could easily take punctuation out from the content before starting the search, but for now this works like a charm.

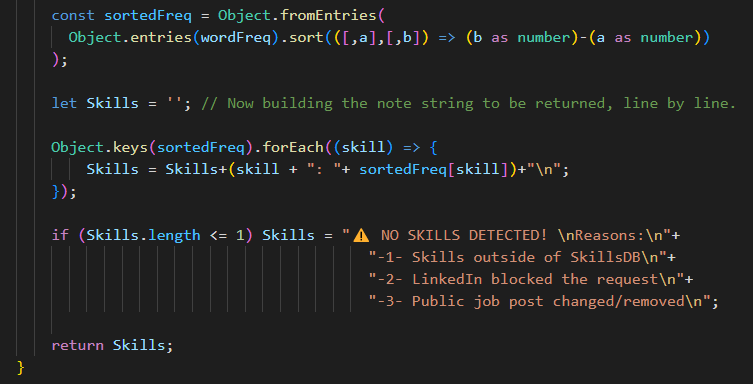
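In text form, the counting logic described above could look roughly like the sketch below. It reuses the names content, inputWords, search and wordFreq from the description, but the function name `countSkills` and the case-sensitive matching are assumptions of this example.

```typescript
// Counts how often each search term occurs in the page text.
// A term wrapped in double quotes (e.g. "\"Java\"") must match a whole word;
// any other term also matches inside longer words. Matching is case-sensitive.
function countSkills(content: string, search: string[]): Record<string, number> {
  // Object.create(null) gives a plain dictionary with no inherited keys.
  const wordFreq: Record<string, number> = Object.create(null);
  const inputWords = content.split(/\s+/).filter((word) => word !== "");

  inputWords.forEach((word) => {
    search.forEach((term) => {
      const quoted =
        term.length > 2 && term.charAt(0) === '"' && term.charAt(term.length - 1) === '"';
      const skill = quoted ? term.slice(1, -1) : term;
      if (skill === "") return; // ignore empty dictionary entries
      const found = quoted ? word === skill : word.indexOf(skill) !== -1;
      if (found) {
        wordFreq[skill] = (wordFreq[skill] || 0) + 1;
      }
    });
  });
  return wordFreq;
}

const sampleCounts = countSkills(
  "We need Java and JavaScript. JavaScript, Java, TypeScript",
  ['"Java"', "JavaScript"]
);
// sampleCounts: Java 1, JavaScript 2.
// The second "Java," is missed because of the attached comma.
```

The sample shows both behaviors at once: the quoted “Java” is not counted inside “JavaScript”, and it is also missed when punctuation sticks to it.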

Finally, let’s make this something that can look nice in a Google Sheet note.

The first fancy line here sorts the key-value pairs within the object by the size of their value, to sort skills by frequency, instead of the order they’re found in. It breaks the object into an array, sorts it, puts it back together.

This is one of the things that my “ancient coding mindset” would consider cutting out, to gain some speed. After all, the most frequent keyword is usually found first anyway, and even if not, the higher number will make it pop… 🤔 But again: a couple of seconds is not a big cost, while a consistent order of keywords by frequency is a big benefit, so I decided to trust modernity!

After this fancy sorting, another forEach loop on the object’s keys makes us build a string that is formatted nicely, with line returns and everything.

Finally, if no words are found, we return another nicely formatted note to be attached to the job post, explaining possible reasons.
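A simplified sketch of this last step follows. Note that it sorts the object’s keys directly instead of rebuilding a sorted object, and that the wording of the “nothing found” note is invented for the example.

```typescript
// Turns the frequency object into note text, most frequent skill first.
function formatSkillsNote(wordFreq: Record<string, number>): string {
  const skills = Object.keys(wordFreq);
  if (skills.length === 0) {
    // Hypothetical wording for the fallback note.
    return (
      "No skills found.\n" +
      "The page may be empty, blocked, or use terms missing from the dictionary."
    );
  }
  // Sort the skill names by their count, highest first.
  skills.sort((a, b) => (wordFreq[b] || 0) - (wordFreq[a] || 0));
  // One "skill: count" pair per line.
  return skills.map((skill) => skill + ": " + wordFreq[skill]).join("\n");
}
```

For example, `formatSkillsNote({ Java: 1, JavaScript: 2 })` gives a two-line note with “JavaScript: 2” on top and “Java: 1” below it.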

Here’s an output: the note on the right, popping up from the job column.

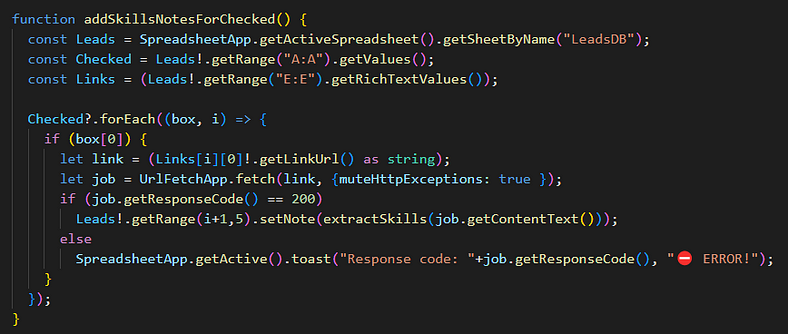

And here is the function that does this to all rows selected with checkboxes:

Straightforward: looks at the checkboxes I added in each row to perform a “sticky selection”, looking at the links column only on rows where boxes are checked, and adds a note with “setNote(extractSkills(content))” on every entry where we get a positive “200” response code from the HTTP request.
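As a rough sketch of that logic, here is a version where the HTTP request and the skill extraction are passed in as parameters, so it can be tried outside Google Sheets. The `LeadRow` shape and the function name `buildNotes` are assumptions. In Apps Script, the request would be made with UrlFetchApp.fetch() and the note written with setNote().

```typescript
// Minimal shape of an HTTP response. In Apps Script, the object returned by
// UrlFetchApp.fetch() offers getResponseCode() and getContentText().
interface PageResponse {
  getResponseCode(): number;
  getContentText(): string;
}

// Hypothetical row shape: the checkbox value plus the job post link.
interface LeadRow {
  checked: boolean;
  url: string;
}

// Returns one note per row, or null where the row is unchecked
// or the request did not come back with a 200 response code.
function buildNotes(
  rows: LeadRow[],
  fetchPage: (url: string) => PageResponse,
  extractSkills: (content: string) => string
): (string | null)[] {
  return rows.map((row) => {
    if (!row.checked) return null;
    const response = fetchPage(row.url);
    if (response.getResponseCode() !== 200) return null;
    return extractSkills(response.getContentText());
  });
}

// In Apps Script, fetchPage could be:
//   (url) => UrlFetchApp.fetch(url, { muteHttpExceptions: true })
// and each non-null note would then be written with range.setNote(note).

// A fake fetcher to try the logic without any network access.
const fakeFetchPage = (url: string): PageResponse => ({
  getResponseCode: () => (url === "https://example.com/ok" ? 200 : 404),
  getContentText: () => "TypeScript developer",
});

const sampleNotes = buildNotes(
  [
    { checked: true, url: "https://example.com/ok" },
    { checked: false, url: "https://example.com/ok" },
    { checked: true, url: "https://example.com/missing" },
  ],
  fakeFetchPage,
  (content) => "Found: " + content
);
// sampleNotes: ["Found: TypeScript developer", null, null]
```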

You might find that your favorite jobs site blocks requests from Google Apps Scripts, and once again, if you want to know the secrets to make this work, contact me or FusionWorks, and we can set it up for you… For a price. 😏

Still, this is not perfect, I know. For example, I just noticed that reading and building the dictionary of searched terms within the extractSkills() function is very inefficient: it should be read only once, and passed to extractSkills(), to avoid re-looking at the dictionary every time a page is analyzed.

Finally an example of my ancient mindset being useful! 😎

P.S.: I tried, and indeed the time was reduced by around 30%, down to 5.8 seconds to search/extract 40 keywords in 10 job posts! Sometimes thinking about performance pays. Out of curiosity, I tried taking away the sorting too, but no effect there, proving again that my ancient mindset needs an update! 😄
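The change itself is small. Here is a sketch of the idea with made-up names, where `readDictionary` stands in for reading the dictionary sheet and a counter shows how many times it gets read.

```typescript
// Hypothetical stand-in for reading the dictionary sheet; counts its own calls.
let dictionaryReads = 0;
function readDictionary(): string[] {
  dictionaryReads += 1;
  return ["TypeScript", "React"];
}

// Takes the dictionary as an argument instead of reading it internally.
function findSkills(content: string, dictionary: string[]): string[] {
  return dictionary.filter((term) => content.indexOf(term) !== -1);
}

function analyzePages(pages: string[]): string[][] {
  const dictionary = readDictionary(); // read once, before the loop
  return pages.map((page) => findSkills(page, dictionary));
}

const pageResults = analyzePages(["React and TypeScript", "Only React", "Nothing here"]);
// Three pages analyzed, but dictionaryReads is 1.
```

However many pages are selected, the dictionary is read a single time.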

Lessons learned, AKA the only part worth reading!!

I really got carried away with the details above, so I hope the non-coders among you (and even most of the coders) skimmed till here.

In short: Google Apps Script written in TypeScript and managed with CLASP+Git on VS Code is an amazingly powerful thing!

I learned that while my concerns for performance sometimes lead to nice gains, they’re more often than not just fossils of my “90s computing mindset”, and I often tend to overestimate them: they surely don’t justify writing ugly code, or avoiding sophisticated stuff just to save some CPU cycles. The CPUs are Google’s anyway, and we use them for free! 😆

As long as the time scripts take to chew through your data is tolerable for you personally, and stays under the MINUTES that Google sets as a limit, you’ll be fine.

This stuff saves HOURS of work, not seconds. But you shouldn’t spend hours writing “perfect” code. Keeping it clean is more useful!

I also learned you can easily make something better than most commercial CRMs, with the most important advantages of Databases (since CRMs are nothing but frontends for Databases, I can’t repeat it enough): you just need Google Sheets plus a few hundred lines of code, that are quite quick to write, since with spreadsheets there’s little abstraction: you can almost touch an array, if you’re ok with fingerprints on your screen… 😜

The most valuable lesson though, is something a bit abstract indeed, and I’m not sure I fully grasped it yet, so I’ll leave it to the next article, closing with a quote I remembered from my distant humanistic background:

We are like dwarfs on the shoulders of giants, so that we can see more than they, and things at a greater distance, not by virtue of any sharpness of sight on our part, or any physical distinction, but because we are carried high and raised up by their giant size.

— Attributed to Bernard of Chartres by John of Salisbury, circa 1159

It’s fitting on so many levels!

But for now I’ll close by thanking the best of the giants carrying “dwarf me” on its shoulders: FusionWorks! 😎
