Tech Recruiter Tries Coding — A problem ChatGPT couldn’t solve!

Hello, and welcome back to this old “series”, which I’ve resurrected for a “mini-episode” rather disconnected from the rest.

If you’d like to check out the rest (pt. 1, pt. 2, pt. 3), it was basically a trip through my first coding steps with Google Apps Script, first in JavaScript and then in TypeScript, automating stuff for recruiting and lead generation.

More recently I wrote a Chrome Extension for recruitment (pt. 1, pt. 2) that gives an easy way to copy and sort data from LinkedIn and other sites.

You can find it on GitHub too, but it won’t work without a web app hosted separately: if interested, let us know!

As for what ChatGPT could not “solve”: it’s also related to LinkedIn, this time in the context of lead generation. As mentioned previously, for a company like FusionWorks that offers great software engineering services, lead generation is a lot like job-hunting!

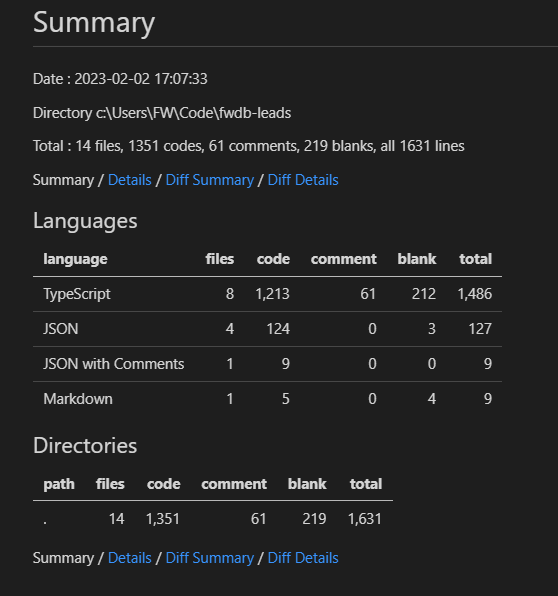

So with a little over 1,200 lines of code, written bit by bit, I built a sort of personalized CRM that populates itself with job posts and related contacts. It all lives in Google Sheets, but it’s indexed like a database.

Now, what problem could this system face that ChatGPT might have helped me solve, but couldn’t? Let’s dive in!

The problem with self-populating spreadsheets is… Population!

Duh, right?

Yes: after a couple of weeks of fully automated running, we had too many leads. Color-coding by date would flag the old ones, but you’d still be curious whether they were open or closed…

Easily solved, though: make a script that checks each job’s status automatically.

Then you just periodically remove all the closed ones with another few lines of script, and the sheet over-population issue is gone!

Since at that time we were using only LinkedIn as a source, it was pretty straightforward: just check how job posts display their open/closed status, and parse the page for that element. (Without querySelector or any of the DOM functions JS developers love, though, since Google Apps Script can’t access those.)
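A minimal sketch of that kind of check, in TypeScript like the rest of the project, could search the raw page text instead of a DOM. The marker strings are placeholders assumed for illustration, not LinkedIn’s actual markup: you’d have to inspect real job pages to pick them. Returning "unknown" when neither marker appears keeps a blocked or login-walled response from being mistaken for a closed job.

```typescript
// Sketch: classify a job page from its raw HTML text, with no DOM access.
// ASSUMPTION: the marker strings passed in are hypothetical placeholders;
// inspect real job pages to choose reliable ones.
type JobStatus = "open" | "closed" | "unknown";

interface StatusMarkers {
  closed: string[]; // text assumed to appear only on closed posts
  open: string[]; // text assumed to appear only on open posts
}

function jobStatusFromHtml(html: string, markers: StatusMarkers): JobStatus {
  // Collapse whitespace so a marker broken across lines still matches.
  const text = html.replace(/\s+/g, " ");
  // Check "closed" first: a closed post may still contain generic open-post text.
  if (markers.closed.some((m) => text.includes(m))) return "closed";
  if (markers.open.some((m) => text.includes(m))) return "open";
  // Neither marker found: possibly a block page or login wall, so don't guess.
  return "unknown";
}
```

In Apps Script, the HTML would come from something like `UrlFetchApp.fetch(url, { muteHttpExceptions: true }).getContentText()`, and only rows classified as "closed" would be queued for removal.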

However, there are two problems with this:

- If you make the script check thousands of posts at top speed, LinkedIn will automatically block the IP of the Google server running your script for making too many requests, so you will get no info after a few fetches.

- If you make it wait between fetches for a time random enough to simulate human behavior, and long enough to be meaningful, the script will need more time than Google allows per execution (6 minutes, according to the Apps Script quotas).
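To put rough numbers on that trade-off, here is a small TypeScript calculation. The 360-second figure is Apps Script’s per-execution limit; the fetch time, delays, and job count are illustrative assumptions, not measurements.

```typescript
// Rough budget: how many paced fetches fit into one Apps Script execution?
// ASSUMPTIONS: 360 s execution limit; fetch time, delays and job count are made-up examples.
function checksPerRun(limitSec: number, fetchSec: number, maxDelaySec: number): number {
  // Plan for the worst case, where every random wait hits the maximum.
  return Math.floor(limitSec / (fetchSec + maxDelaySec));
}

function runsNeeded(totalJobs: number, perRun: number): number {
  return Math.ceil(totalJobs / perRun);
}

function hoursForAll(totalJobs: number, fetchSec: number, avgDelaySec: number): number {
  // Total wall-clock time if every check ran back to back in one long process.
  return (totalJobs * (fetchSec + avgDelaySec)) / 3600;
}

const perRun = checksPerRun(360, 2, 60);
console.log(perRun, runsNeeded(2000, perRun), hoursForAll(2000, 2, 45).toFixed(1)); // prints: 5 400 26.1
```

Even with these generous assumptions, a couple of thousand leads need over a day of paced fetching, which is nowhere near a single 6-minute execution.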

You might think ChatGPT couldn’t solve this problem, but that’s not exactly the point. The thing is, ChatGPT didn’t realise there was such a problem at all!

ChatGPT sees no problem at all, and that’s the problem!

I can’t show screenshots of the replies, since ChatGPT spends more time at capacity than usable these days, especially for old chats. But the gist is that it replied as if there were no execution time limit to hit.

What it suggested was to write the checking function and then make it call itself recursively with setTimeout(), at randomized intervals.

Nope! The script has to keep running to keep track of time, so it would exceed Google’s limit. Even if you hosted this somewhere with no limit, it wouldn’t be very efficient: why keep something running just to wait?

So I reminded ChatGPT of the time limit issue, and things got even funnier!

It now suggested I run the whole script with setInterval(), so the script would only run when needed… As if I didn’t need another script running all the time to run a script at intervals!! 🙉

But I’m not telling the whole truth, here. 🤥

The truth is I already knew the solution and just wanted ChatGPT to write the code… So in the end, I asked specifically for code implementing the solution I wanted.

When you know the solution to a problem, it becomes easier. Duh!

What was needed was a script that checks a small number of jobs and then schedules its own next run via Google Apps Script’s installable triggers.

This way the execution time limit would never be reached, and you’d have enough time between fetches for LinkedIn not to consider it a problem.
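The batching half of that idea could look like the sketch below: each run takes the next few rows starting from a saved cursor, and wraps back to the top after the last row. Treating jobs as 0-based sheet row indexes, and the batch size itself, are assumptions for illustration.

```typescript
// Sketch: choose the next small batch of rows to check, resuming from a cursor.
// ASSUMPTIONS: jobs are addressed by 0-based row index; batch size is illustrative.
interface Batch {
  rows: number[]; // row indexes to check in this run
  nextCursor: number; // where the following run should start
}

function nextBatch(totalRows: number, cursor: number, batchSize: number): Batch {
  if (totalRows <= 0) return { rows: [], nextCursor: 0 };
  // Rows may have been deleted since the last run, leaving the cursor past the end.
  const start = cursor >= 0 && cursor < totalRows ? cursor : 0;
  const end = Math.min(start + batchSize, totalRows);
  const rows: number[] = [];
  for (let r = start; r < end; r++) rows.push(r);
  // After the last row, wrap around so the next run starts from the top again.
  return { rows, nextCursor: end >= totalRows ? 0 : end };
}
```

Each run would check only `rows`, save `nextCursor`, and then hand over to the scheduler, so a single execution never does more than a handful of fetches.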

With such a prompt, ChatGPT produced a nearly viable solution: the job-checking code wasn’t there, of course (I knew it couldn’t know the HTML structure of a LinkedIn job post, so I didn’t ask for it), but the rest was OK, and so was the scheduling of its own re-runs.

It even correctly figured out that you’d need Apps Script’s so-called PropertiesService to make the script remember the last thing it checked.

Tip: if you build something like this, don’t use Document or User Properties; go for Script Properties instead. This way you can see them in the project settings.
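Here is a minimal sketch of that persistence step. In Apps Script you’d pass `PropertiesService.getScriptProperties()` as the store; the `KeyValueStore` interface is a hypothetical stand-in that mirrors only the two `Properties` methods used here (`getProperty`, `setProperty`), so the sketch also runs outside Apps Script with the in-memory fake. The property name `lastCheckedRow` is likewise hypothetical.

```typescript
// Sketch: remember "where we left off" between runs.
// ASSUMPTION: in Apps Script, pass PropertiesService.getScriptProperties() as the store.
interface KeyValueStore {
  getProperty(key: string): string | null;
  setProperty(key: string, value: string): unknown;
}

const CURSOR_KEY = "lastCheckedRow"; // hypothetical property name

function loadCursor(store: KeyValueStore): number {
  const raw = store.getProperty(CURSOR_KEY);
  const n = raw === null ? 0 : parseInt(raw, 10);
  // Missing or corrupted values simply restart from the top.
  return Number.isFinite(n) && n >= 0 ? n : 0;
}

function saveCursor(store: KeyValueStore, row: number): void {
  // Properties only hold strings, so the number is stored as text.
  store.setProperty(CURSOR_KEY, String(row));
}

// In-memory fake for running the sketch outside Apps Script.
class MemoryStore implements KeyValueStore {
  private data = new Map<string, string>();
  getProperty(key: string): string | null {
    const value = this.data.get(key);
    return value === undefined ? null : value;
  }
  setProperty(key: string, value: string): this {
    this.data.set(key, value);
    return this;
  }
}
```

Because Script Properties belong to the project rather than to a user or document, the stored cursor is the one you can inspect in the project settings.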

But again, it tripped up on something specific to the environment we were running the code in. Its function would have created an ever-growing number of one-shot triggers that, unless cleaned up manually (one by one), would hit Apps Script’s limit of 20 triggers per user per script in no time.

At this point, though, I stopped being lazy and wrote the thing myself: all I needed was something that cleaned up after itself, deleting the trigger that had fired it right after installing the next one.

I ended up with this “Homunculus” function: a generic scheduler that cleans up after itself, using the Script Properties mentioned above for storage.

I just call it from the function that checks the jobs’ status, passing minimum and maximum seconds, and it schedules the next run of that same function at a random time between the two.
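The original function isn’t reproduced here, so what follows is only a sketch of the same idea under my own assumptions: a hypothetical `scheduleNextRun(handler, minSec, maxSec, triggers, store)` that installs the next one-shot trigger at a random delay, stores its ID in a Script-Properties-like store, and then deletes the previous trigger (the one that started the current run). `TriggerService` is a hypothetical thin wrapper injected so the logic can be tested outside Apps Script; the commented adapter shows how it might map onto `ScriptApp`, whose `newTrigger().timeBased().after().create()`, `getProjectTriggers()`, `deleteTrigger()` and `Trigger.getUniqueId()` do exist.

```typescript
// Sketch of a self-rescheduling, self-cleaning scheduler (hypothetical names).
interface TriggerLike {
  getUniqueId(): string;
}

interface TriggerService {
  createOneShot(handler: string, delayMs: number): TriggerLike;
  getProjectTriggers(): TriggerLike[];
  deleteTrigger(trigger: TriggerLike): void;
}

interface KeyValueStore {
  getProperty(key: string): string | null;
  setProperty(key: string, value: string): unknown;
}

// Possible Apps Script adapter (not executed here):
// const appsScriptTriggers: TriggerService = {
//   createOneShot: (h, ms) => ScriptApp.newTrigger(h).timeBased().after(ms).create(),
//   getProjectTriggers: () => ScriptApp.getProjectTriggers(),
//   deleteTrigger: (t) => ScriptApp.deleteTrigger(t as GoogleAppsScript.Script.Trigger),
// };

function randomDelayMs(minSec: number, maxSec: number): number {
  const lo = Math.min(minSec, maxSec);
  const hi = Math.max(minSec, maxSec);
  // Uniformly random point between lo and hi seconds, converted to milliseconds.
  return Math.round((lo + Math.random() * (hi - lo)) * 1000);
}

function scheduleNextRun(
  handler: string,
  minSec: number,
  maxSec: number,
  triggers: TriggerService,
  store: KeyValueStore,
): string {
  const key = `nextTrigger_${handler}`; // hypothetical property name
  const previousId = store.getProperty(key);

  // 1. Install the next one-shot trigger first, so the chain never breaks.
  const next = triggers.createOneShot(handler, randomDelayMs(minSec, maxSec));
  store.setProperty(key, next.getUniqueId());

  // 2. Then delete the trigger that started this run: it has already fired,
  //    and leftover one-shot triggers would pile up toward the trigger quota.
  if (previousId !== null) {
    for (const t of triggers.getProjectTriggers()) {
      if (t.getUniqueId() === previousId) triggers.deleteTrigger(t);
    }
  }
  return next.getUniqueId();
}
```

A hypothetical `checkJobs()` would end each batch with something like `scheduleNextRun("checkJobs", 60, 180, appsScriptTriggers, PropertiesService.getScriptProperties())`. Creating before deleting means a failed deletion leaves one extra trigger rather than no next run at all.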

Now, through the “ticker system” I mentioned around here, which shows status messages in sheet tabs, I always know what it checked last and when, and when it will run next, like this…

… Or if I wait because I wanna see the magic happening live… 😁

Like so! And that’s it with the topic.

Medium.deleteTrigger(oldArticle); Medium.newTrigger(newArticle);

Yes, it’s conclusion time: Medium stats tell me people rarely get halfway through my usual articles, so we’ve got to cut… 🤣

In conclusion: even with very specific prompts, ChatGPT will make mistakes when you’re working in an environment that has lots of constraints.

It knows each of those constraints individually, but can’t apply them to the code it generates unless you ask specific questions about them.

If I hadn’t thought of a solution myself, ChatGPT would have been completely useless here. But if you do know the solution and specify it in the prompt, it is a nice homunculus to write the tedious stuff for you…🧟

Next I’ll dive again into the Chrome Extension, and the web app I built to support it… Unless you have suggestions. Please do have suggestions!! 🤣

P.S.: I wanted to include screenshots of ChatGPT’s replies, but it turns out those conversations were never saved, I guess because they happened before it had chat history… So I tried to replicate them, and now it gives the right answers straight away, down to deleting old triggers… Did my negative feedback teach it?

Were my prompts just terrible before? We’ll never know!